RAID-0:

------------

- RAID-0 offers pure disk striping.

- It provides neither data redundancy nor parity protection.

- In fact, RAID-0 is the only RAID level focusing solely on performance.

- Some vendors, such as EMC, do not consider level 0 to be true RAID and do not offer solutions based on it.

- Pure RAID-0 significantly lowers the array's MTBF: because the failure of any single disk takes the whole array down, an N-disk stripe fails roughly N times as often as a single disk.

- If any disk in the array (across which Oracle files are striped) fails, the database goes down.
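To make the striping and the MTBF point concrete, here is a minimal shell sketch. It assumes a simple round-robin layout with a stripe unit of one block (real controllers use vendor-specific stripe sizes) and independent disk failures; the helper names `stripe_location` and `stripe_mtbf` and the 1,000,000-hour disk rating are hypothetical illustration values.

```shell
#!/bin/sh
# Hypothetical helpers illustrating RAID-0 (pure striping).
# Assumption: round-robin layout with a stripe unit of one block.

# stripe_location BLOCK NDISKS -> "disk=<d> offset=<o>"
# Logical block BLOCK goes to disk BLOCK mod NDISKS, in stripe row BLOCK / NDISKS.
stripe_location() {
  local block=$1 ndisks=$2
  echo "disk=$(( block % ndisks )) offset=$(( block / ndisks ))"
}

# stripe_mtbf DISK_MTBF_HOURS NDISKS -> approximate MTBF of the whole stripe.
# Losing any one disk loses the array, so with independent, constant-rate
# failures the failure rates add up and MTBF_array = MTBF_disk / NDISKS.
stripe_mtbf() {
  echo $(( $1 / $2 ))
}

# Usage: a 4-disk stripe built from disks rated at 1,000,000 hours each.
for b in 0 1 2 3 4 5; do
  echo "block $b -> $(stripe_location "$b" 4)"
done
echo "array MTBF ~ $(stripe_mtbf 1000000 4) hours"
```

Consecutive blocks land on different disks, which is where the performance comes from; the same spreading is why one failed disk touches every Oracle file striped across the array.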

RAID-1:

------------

- With RAID-1, all data is written onto two independent disks (a "disk pair") for complete data protection and redundancy.

- RAID-1 is also referred to as disk mirroring or disk shadowing.

- Data is written simultaneously to both disks to ensure that writes are almost as fast as to a single disk.

- During reads, the disk that is the least busy is utilized.

- RAID-1 is the most secure and reliable of all levels due to full 100-percent redundancy.

- However, the main disadvantage from a performance perspective is that every write has to be duplicated.

- Nevertheless, read performance is enhanced, as a read can be served from either disk.

- RAID-1 demands a significant monetary investment to duplicate each disk; however, it provides a very high mean time between failures (MTBF).

- Combining RAID levels 0 and 1 (RAID-0+1) allows data to be striped across an array, in addition to mirroring each disk in the array.

RAID-0 & RAID-1:

--------------------------

- If RAID-0 is combined with RAID-1 (mirroring), this provides resilience, but at the cost of doubling the number of disk drives in the configuration.

- There is another benefit in some RAID-1 software implementations: the requested data is always returned from the least busy device. This can account for a further increase in read performance of over 85% compared to the striped, non-mirrored configuration.

- Writes, on the other hand, have to go to both halves of the software mirror. If the second mirror half is on a second controller (as is normally recommended for controller resilience), this write degradation can be as low as 4 percent.
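The capacity and write-cost trade-off between pure striping and mirroring can be put side by side in a small shell sketch. The helper `raid_profile` is hypothetical; it assumes identical disks and counts how many physical disk writes one logical write turns into, ignoring any controller write cache.

```shell
#!/bin/sh
# raid_profile LEVEL NDISKS SIZE_GB -> usable capacity and physical writes per logical write
# Assumptions: NDISKS identical disks of SIZE_GB each, no controller write cache.
raid_profile() {
  local level=$1 ndisks=$2 size_gb=$3 usable writes
  case "$level" in
    0)
      usable=$(( ndisks * size_gb )); writes=1 ;;       # striping only, no copy
    1|0+1)
      usable=$(( ndisks * size_gb / 2 )); writes=2 ;;   # every block is stored twice
    *)
      echo "unsupported level: $level" >&2; return 1 ;;
  esac
  echo "RAID-$level usable=${usable}GB writes_per_write=$writes"
}

# Usage: eight 300 GB disks, striped versus striped and mirrored.
raid_profile 0 8 300
raid_profile 0+1 8 300
```

Halving the usable space is the "double the number of disk drives" cost described above, and the second physical write per logical write is the mirror overhead that a second controller helps to hide.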

RAID-3:

------------

- In a RAID-3 configuration, a single drive is dedicated to storing error correction or parity data. Information is striped across the remaining drives.

- Because each I/O typically involves every data drive in the array, RAID-3 dramatically reduces the level of concurrency that the disk subsystem can support (I/Os per second) compared with a comparable software-mirrored solution. The worst case for a system using RAID-3 would be an OLTP environment, where rapid transactions are numerous and response time is critical.

- So, to put it simply, if the environment is mainly read-only (e.g. decision support), RAID-3 provides disk redundancy with slightly improved read performance, but at the cost of write performance. Unfortunately, even decision support databases still do a significant amount of disk writing, since complex joins, unique searches, etc. still do temporary work, which involves disk writing.
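Parity protection in RAID-3 (and RAID-5) rests on XOR: the parity value is the XOR of the data values at the same position on each drive, so the value on any one failed drive equals the XOR of the parity with all surviving values. A minimal shell sketch, using small integers to stand in for data bytes; the helper names `xor_parity` and `rebuild` are hypothetical, and real controllers do this over whole stripe units in hardware.

```shell
#!/bin/sh
# xor_parity V1 V2 ... -> XOR of all arguments (the parity value)
xor_parity() {
  local p=0 v
  for v in "$@"; do
    p=$(( p ^ v ))
  done
  echo "$p"
}

# rebuild PARITY SURVIVOR1 SURVIVOR2 ... -> value that was on the failed drive.
# Since x ^ x = 0, XOR-ing the parity with every surviving value cancels them
# out and leaves exactly the missing value.
rebuild() {
  xor_parity "$@"
}

# Usage: three data drives holding 65, 66, 67 (ASCII 'A', 'B', 'C').
d1=65 d2=66 d3=67
p=$(xor_parity "$d1" "$d2" "$d3")
echo "parity=$p"
echo "drive 2 rebuilt as $(rebuild "$p" "$d1" "$d3")"
```

Note that rebuilding one value requires reading every surviving drive, which is why parity-based arrays pay such a high I/O price during a disk rebuild.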

RAID-5:

------------

- Instead of total disk mirroring, RAID-5 computes and writes parity for every write operation. Storing parity avoids the cost of fully duplicating the disk drives, as RAID-1 does.

- If a disk fails, parity is used to reconstruct data without system loss. Both data and parity are spread across all the disks in the array, thus reducing disk bottleneck problems.

- Read performance is improved, but every write incurs the additional overhead of reading the old data and old parity, computing the new parity, and then writing the new parity and the actual data, with the last two operations happening while two disk drives are simultaneously locked. This overhead is notoriously known as the RAID-5 write penalty, and it can make writes significantly slower.

- Also, if a disk fails in a RAID-5 configuration, the I/O penalty incurred during the disk rebuild is extremely high. Read-intensive applications (DSS, data warehousing) can use RAID-5 without major real-time performance degradation (the write penalty would still be incurred during batch load operations in DSS applications).

- In terms of storage, however, parity consumes only one disk's worth of space per array (for example, 20 percent of the raw capacity of a five-disk array), whereas RAID-1 and 0+1 store every byte twice, a 100-percent overhead.

- Initially, when RAID-5 technology was introduced, it was labeled as the cost-effective panacea for combining high availability and performance. Gradually, users realized the truth, and until about a couple of years ago, RAID-5 was regarded as the villain in most OLTP shops. Many sites contemplated getting rid of RAID-5 and started looking at alternative solutions.

- RAID 0+1 gained prominence as the best OLTP solution for people who could afford it. Over the last two years, RAID-5 has been making a comeback, either as hardware-based RAID-5 or as enhanced RAID-7 or RAID-S implementations. However, RAID-5 evokes bad memories for too many OLTP database architects.
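The write penalty and the storage overhead can both be sketched in shell. The hypothetical helper `rmw_parity` applies the standard small-write parity update, new parity = old parity XOR old data XOR new data, which is why the controller must first read the old data and old parity (2 reads) before writing the new data and new parity (2 writes). The hypothetical `parity_overhead_pct` shows that the 20-percent figure corresponds to a five-disk array: with N disks, one disk's worth of space out of N holds parity.

```shell
#!/bin/sh
# rmw_parity OLD_PARITY OLD_DATA NEW_DATA -> new parity after a small write.
# XOR-ing out the old data and XOR-ing in the new data updates the parity
# without reading the other data drives in the stripe.
rmw_parity() {
  echo $(( $1 ^ $2 ^ $3 ))
}

# parity_overhead_pct NDISKS -> share of raw capacity used by parity (integer %)
parity_overhead_pct() {
  echo $(( 100 / $1 ))
}

# Usage: a stripe holding 65, 66, 67 (parity 64); the second value changes to 90.
echo "new parity=$(rmw_parity 64 66 90)"
echo "full recompute gives $(( 65 ^ 90 ^ 67 ))"
echo "I/Os for this one logical write: 2 reads + 2 writes = 4"
for n in 3 5 8; do
  echo "$n disks: parity uses ~$(parity_overhead_pct "$n")% of raw capacity"
done
```

The incremental update and the full recomputation agree, so the controller only has to touch two drives per small write; the cost is the four physical I/Os instead of one.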

RAID-S:

-------------

- RAID-S is EMC's implementation of RAID-5. However, it differs from pure RAID-5 in two main aspects:

(1) It stripes the parity, but it does not stripe the data.

(2) It incorporates an asynchronous hardware environment with a write cache. This cache is primarily a mechanism to defer writes, so that the overhead of calculating and writing parity information can be handled by the system while it is relatively less busy (and less likely to exasperate the user!).

Many users of RAID-S imagine that since RAID-S is supposedly an enhanced version of RAID-5, data striping is automatic. They often wonder why they are experiencing I/O bottlenecks in spite of all that striping. It is vital to remember that in RAID-S, striping of data is not automatic and has to be done manually via third-party disk-management software.

RAID-7:

------------

RAID-7 also implements a cache, controlled by a sophisticated built-in real-time operating system. Here, however, data is striped and parity is not. Instead, parity is held on one or more dedicated drives. RAID-7 is a patented architecture of Storage Computer Corporation.

2. Pros and Cons of Implementing RAID Technology

----------------------------------------------------------------------------

There are benefits and disadvantages to using RAID, and those depend on the RAID level under consideration and the specific system in question.

In general, RAID level 1 is most useful for systems where complete redundancy of data is a must and disk space is not an issue. For large datafiles or systems with less disk space, this RAID level may not be feasible. Writes under this level of RAID are no faster and no slower than 'usual'. For the parity-based levels of RAID, writes will tend to be slower and reads will be faster than under 'normal' file systems. Writes will be slower the more frequently ECCs are calculated and the more complex those ECCs are. Depending on the ratio of reads to writes in your system, I/O speed may show a net increase or a net decrease. However, RAID can improve performance by distributing I/O: the RAID controller spreads data over several physical drives, so no single drive is overburdened.

The striping of data across physical drives has several consequences besides balancing I/O. One additional advantage is that logical files may be created which are larger than the maximum size usually supported by an operating system. There are disadvantages as well, however. Striping means that it is no longer possible to locate a single datafile on a specific physical drive. This may cause the loss of some application tuning capabilities. Also, in Oracle's case, it can make database recovery more time-consuming: if a single physical disk in a RAID array needs recovery, all the disks that are part of that logical RAID device must be involved in the recovery. Finally, the storage of ECCs may require up to 20% more disk space than storage of the data alone, so there is some disk overhead involved in using RAID.

3. RAID and Oracle

----------------------------

The usage of RAID is transparent to Oracle. All the features specific to the RAID configuration are handled by the operating system and happen behind the scenes as far as Oracle is concerned. Different Oracle file types are suited differently to RAID devices. Datafiles and archive logs can be placed on RAID devices, since they are accessed randomly. The database is sensitive to the read/write performance of the redo logs, which should be on RAID 1, RAID 0+1, or no RAID at all: they are accessed sequentially, and their performance benefits from the disk head staying near the last write location. Keep in mind that RAID 0+1 does add overhead due to the two physical I/Os per write. Mirroring of redo log files, however, is strongly recommended by Oracle. In terms of administration, RAID is far simpler than using Oracle techniques for data placement and striping.

Recommendations:

In general, RAID impacts write operations more than read operations. This is especially true where parity needs to be calculated (RAID 3, RAID 5, etc.). Online or archived redo log files can be put on RAID 1 devices; you should not use RAID 5 for them. 'TEMP' tablespace datafiles should also go on RAID 1 instead of RAID 5. The reason is that the streamed write performance of distributed parity (RAID 5) isn't as good as that of simple mirroring (RAID 1).

Swap space can be used on RAID devices without affecting Oracle.

| RAID | Type of RAID | Control File | Database File | Redo Log File | Archive Log File |
|------|--------------|--------------|---------------|---------------|------------------|
| 0 | Striping | Avoid* | OK* | Avoid* | Avoid* |
| 1 | Shadowing | OK | OK | Recommended | Recommended |
| 0+1 | Striping + Shadowing | OK | Recommended (1) | OK | Avoid |
| 3 | Striping with Static Parity | OK | Avoid (2) | Avoid | Avoid |
| 5 | Striping with Rotating Parity | OK | Avoid (2) | Avoid | Avoid |

* RAID 0 does not provide any protection against failures. It requires a strong backup strategy.

(1) RAID 0+1 is recommended for database files because this avoids hot spots and gives the best possible performance during a disk failure. The disadvantage of RAID 0+1 is that it is a costly configuration.

(2) Avoid when heavy write operations involve this datafile.

Thursday, June 28, 2012

RAID (Redundant Arrays of Inexpensive Disks) in Oracle

Wednesday, June 27, 2012

How to change length of a column in all tables with the same column name

Suppose a column named "USERNAME" exists in several tables, with lengths varying from 12 bytes to 50 bytes. You need to change every "USERNAME" column shorter than 40 bytes to exactly 40 bytes, leaving all other columns unchanged.

-------- Query to find tables whose USERNAME column is shorter than 40 bytes --------
select owner, table_name, column_name, data_type, data_length
from dba_tab_columns
where owner not in ('SYSMAN', 'OLAPSYS', 'SYSTEM', 'SYS')        -- exclude system schemas
and column_name = 'USERNAME'
and data_length < 40
and (owner, table_name) in (select owner, table_name from dba_tables);   -- tables only, not views

--------- Prepare a script to revert to the original lengths (run this first and save the output) ---------
select 'ALTER TABLE '||owner||'.'||table_name||' MODIFY (USERNAME VARCHAR2('||data_length||' BYTE));'
from dba_tab_columns
where owner not in ('SYSMAN', 'OLAPSYS', 'SYSTEM', 'SYS')
and column_name = 'USERNAME'
and data_type = 'VARCHAR2'                                      -- the generated DDL assumes VARCHAR2
and data_length < 40
and (owner, table_name) in (select owner, table_name from dba_tables);

--------- Prepare a script to change the length to 40 bytes ---------
select 'ALTER TABLE '||owner||'.'||table_name||' MODIFY (USERNAME VARCHAR2(40 BYTE));'
from dba_tab_columns
where owner not in ('SYSMAN', 'OLAPSYS', 'SYSTEM', 'SYS')
and column_name = 'USERNAME'
and data_type = 'VARCHAR2'
and data_length < 40
and (owner, table_name) in (select owner, table_name from dba_tables);

Exadata

The Exadata products address three key dimensions relating to performance:

- More pipes to deliver more data faster

- Wider pipes that provide extremely high bandwidth

- Shipping just the data required to satisfy SQL requests

There are two members of the Oracle Exadata product family:

- HP Oracle Exadata Storage Server, also known as the Exadata Cell, running the Exadata Storage Server Software provided by Oracle.

- HP Oracle Database Machine.

The Exadata cell comes preconfigured with:

- two Intel 2.66 GHz quad-core processors,

- twelve disks connected to a Smart Array storage controller with 512K of non-volatile cache,

- 8 GB memory,

- dual-port InfiniBand connectivity (16 Gigabits of bandwidth),

- a management card for remote access,

- redundant power supplies, and

- all the software preinstalled.

It can be installed in a typical 19-inch rack.

Two versions of the Exadata cell are offered:

- The first is based on:

  - 450 GB Serial Attached SCSI (SAS) drives,

  - 1.5 TB of uncompressed user data capacity, and

  - up to 1 GB/second of data bandwidth.

- The second is based on:

  - 1 TB Serial Advanced Technology Attachment (SATA) drives,

  - 3.3 TB of uncompressed user data capacity, and

  - up to 750 MB/second of data bandwidth.

- No cell-to-cell communication is ever done or required.

- A rack can contain up to eighteen Exadata cells, so the peak data throughput for the SAS-based configuration would be 18 GB/second.

- If additional storage capacity is required, add more racks with Exadata cells to scale to any required bandwidth or capacity level.

- Once a new rack is connected, the new Exadata disks can be discovered by the Oracle Database and made available.

- Data is mirrored across cells to ensure that the failure of a cell will not cause loss of data or inhibit data accessibility.

- SQL processing is offloaded from the database server to the Oracle Exadata Storage Server.

- All features of the Oracle Database are fully supported with Exadata.

- Exadata works equally well with single-instance databases and RAC.

- Functionality like Data Guard, Recovery Manager (RMAN), Streams, and other database tools is administered the same way, with or without Exadata.

- Users and database administrators leverage the same tools and knowledge they are familiar with today, because these work just as they do with traditional non-Exadata storage.

- Both Exadata and non-Exadata storage may be used concurrently for database storage to facilitate migration to, or from, Exadata storage.

- Exadata has also been integrated with Oracle Enterprise Manager (EM) Grid Control by installing an Exadata plug-in into the existing EM system.

- The Oracle Storage Server Software resident in the Exadata cell runs under Oracle Enterprise Linux (OEL). OEL is accessible in a restricted mode to administer and manage the Exadata cell.

- Oracle Database 11g has been significantly enhanced to take advantage of Exadata storage.

- The Exadata software is optimally divided between the database server and the Exadata cell.

- Two versions of the Database Machine are offered: the Database Machine Full Rack and the Database Machine Half Rack.

- The Database Machine Full Rack contains:

  - fourteen Exadata Storage Servers (either SAS or SATA),

  - eight HP ProLiant DL360 G5 Oracle Database 11g database servers (dual-socket quad-core Intel® 2.66 GHz processors, 32 GB RAM, four 146 GB SAS drives, dual-port InfiniBand Host Channel Adapter (HCA), dual 1 Gb/second Ethernet ports, and redundant power supplies),

  - an Ethernet switch for communication from the Database Machine to database clients, and

  - Keyboard, Video or Visual Display Unit, Mouse (KVM) hardware for local administration of the system.

- Full Rack capacity and bandwidth:

  - using SAS-based Exadata storage cells, up to 21 TB of data capacity and up to 14 GB/second of I/O bandwidth;

  - using SATA-based Exadata storage cells, up to 46 TB of capacity and up to 10.5 GB/second of I/O bandwidth.

- The Database Machine Half Rack contains half of all components of the Full Rack configuration.
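The rack-level figures follow directly from the per-cell numbers given earlier (1.5 TB and up to 1 GB/second per SAS cell, 3.3 TB and up to 750 MB/second per SATA cell). A quick shell check, working in decimal GB and MB/second to stay in integer arithmetic; `rack_totals` is a hypothetical helper, not an Exadata tool.

```shell
#!/bin/sh
# rack_totals CELLS CELL_CAPACITY_GB CELL_BANDWIDTH_MBPS
# Prints aggregate capacity (GB) and peak bandwidth (MB/s). Cells work
# independently (no cell-to-cell traffic), so the peaks scale with the cell count.
rack_totals() {
  echo "capacity=$(( $1 * $2 ))GB bandwidth=$(( $1 * $3 ))MB/s"
}

rack_totals 14 1500 1000   # Full Rack, SAS cells
rack_totals 14 3300 750    # Full Rack, SATA cells
rack_totals 18 1500 1000   # 18-cell rack, SAS cells
```

The three lines reproduce the quoted 21 TB / 14 GB/second, about 46 TB / 10.5 GB/second, and the 18 GB/second peak for eighteen SAS cells.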

- The database server and the Exadata Storage Server Software communicate using iDB, the Intelligent Database protocol. iDB is implemented in the database kernel and transparently maps database operations to Exadata-enhanced operations.

- iDB is used to ship SQL operations down to the Exadata cells for execution and to return query result sets to the database kernel. Instead of returning database blocks, Exadata cells return only the rows and columns that satisfy the SQL query.

- Like existing I/O protocols, iDB can also directly read and write ranges of bytes to and from disk, so when offload processing is not possible, Exadata operates like a traditional storage device for the Oracle database.
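As a rough analogy only (this is not how iDB is implemented), the difference between block shipping and offloaded processing resembles running a filter where the data lives versus copying the whole file first. In this shell sketch a small text file stands in for a table on a cell, and `awk` plays the role of the cell applying the WHERE predicate and the column projection; the file contents, the column layout and the `ship_blocks`/`smart_scan` names are all made up for illustration.

```shell
#!/bin/sh
# Hypothetical "table" stored on a cell, columns: id,region,amount
table=$(mktemp)
trap 'rm -f "$table"' EXIT
printf '%s\n' 1,EAST,100 2,WEST,250 3,EAST,75 4,NORTH,300 > "$table"

# Block shipping: every row and every column crosses the "interconnect".
ship_blocks() { cat "$table"; }

# Offload analogy: filter (region = 'EAST') and project (id, amount)
# on the storage side, so only qualifying data is returned.
smart_scan() { awk -F, '$2 == "EAST" { print $1 "," $3 }' "$table"; }

echo "bytes shipped without offload: $(ship_blocks | wc -c)"
echo "bytes shipped with offload:    $(smart_scan | wc -c)"
smart_scan
```

The saving grows with the selectivity of the predicate and the number of unneeded columns, which is the "ship just the data required" dimension listed at the start of this post.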

- CELLSRV (Cell Services) is the primary component of the Exadata software running in the cell and provides the majority of Exadata storage services. CELLSRV is multi-threaded software that communicates with the database instance on the database server and serves blocks to databases based on the iDB protocol. It:

  - provides the advanced SQL offload capabilities,

  - serves Oracle blocks when SQL offload processing is not possible, and

  - implements the DBRM I/O resource management functionality to meter out I/O bandwidth to the various databases and consumer groups issuing I/O.

- If a cell dies during a smart scan, the uncompleted portions of the smart scan are transparently routed to another cell for completion.

- SQL EXPLAIN PLAN shows when Exadata smart scan is used.

- The Oracle Database and Exadata cooperatively execute various SQL statements.

- Two other database operations that are offloaded to Exadata are incremental database backups and tablespace creation.

- The Database Resource Manager (DBRM) feature in Oracle Database 11g has been enhanced for use with Exadata. DBRM lets the user define and manage intra- and inter-database I/O bandwidth in addition to CPU, undo, degree of parallelism, active sessions, and the other resources it manages.

- An Exadata administrator can create a resource plan that specifies how I/O requests should be prioritized. This is accomplished by putting the different types of work into service groupings called Consumer Groups. Consumer groups can be defined by username, client program name, function, or length of time the query has been running. The user can set a hierarchy of which consumer group gets precedence in I/O resources and how much of the I/O resource is given to each consumer group.
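To illustrate the idea of metering I/O bandwidth by consumer group, here is a deliberately simplified proportional-share model in shell. It assumes a fixed amount of available bandwidth and a percentage allocation per group, and divides bandwidth only among groups that currently have I/O queued, so an idle group's share goes to the busy ones. The group names, percentages and the `allocate_io` helper are hypothetical, and the real DBRM/IORM scheduling is more elaborate than this.

```shell
#!/bin/sh
# Simplified proportional-share model (not the actual IORM algorithm).
# allocate_io TOTAL_MBPS NAME:PCT:ACTIVE ...
# Active groups (ACTIVE=1) split TOTAL in proportion to PCT; idle groups get 0.
allocate_io() {
  local total=$1 sum=0 spec name rest pct active
  shift
  # First pass: add up the percentages of the groups that have I/O queued.
  for spec in "$@"; do
    rest=${spec#*:}; pct=${rest%%:*}; active=${rest#*:}
    if [ "$active" -eq 1 ]; then sum=$(( sum + pct )); fi
  done
  # Second pass: hand out bandwidth proportionally (integer MB/s).
  for spec in "$@"; do
    name=${spec%%:*}; rest=${spec#*:}; pct=${rest%%:*}; active=${rest#*:}
    if [ "$active" -eq 1 ] && [ "$sum" -gt 0 ]; then
      echo "$name=$(( total * pct / sum ))"
    else
      echo "$name=0"
    fi
  done
}

# Usage: OLTP 60%, REPORTS 30%, BATCH 10% of 1000 MB/s, with BATCH idle.
allocate_io 1000 OLTP:60:1 REPORTS:30:1 BATCH:10:0
```

With BATCH idle, OLTP and REPORTS keep their 2:1 ratio but share the whole 1000 MB/s between them.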

- Automatic Storage Management (ASM) is used to manage the storage in the Exadata cell.

- ASM provides data protection against drive and cell failures.

- A Cell Disk is the virtual representation of the physical disk, minus the System Area LUN (if present).

- A Cell Disk is represented by a single LUN, which is created and managed automatically by the Exadata software when the physical disk is discovered.

- Cell Disks can be further virtualized into one or more Grid Disks.

- It is also possible to partition a Cell Disk into multiple Grid Disk slices.

- Example:

  - Once the Cell Disks and Grid Disks are configured, ASM disk groups are defined across the Exadata configuration.

  - Two ASM disk groups are defined, one across the “hot” grid disks and a second across the “cold” grid disks.

  - All of the “hot” grid disks are placed into one ASM disk group, and all the “cold” grid disks are placed in a separate disk group.

  - When the data is loaded into the database, ASM will evenly distribute the data and I/O within the disk groups.

  - ASM mirroring can be activated for these disk groups to protect against disk failures for both, either, or neither of the disk groups.

  - Mirroring can be turned on or off independently for each of the disk groups.

- Lastly, to protect against the failure of an entire Exadata cell, ASM failure groups are defined. Failure groups ensure that mirrored ASM extents are placed on different Exadata cells.
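The guarantee that failure groups provide can be illustrated with a toy placement routine. Assuming normal (two-way) redundancy with one failure group per cell, this sketch puts each extent's primary copy on one cell and its mirror on the next cell in round-robin order, so the two copies never share a cell. The `place_extents` and `nth` helpers and the cell names are hypothetical; ASM's real allocation algorithm is more sophisticated, and this only demonstrates the invariant.

```shell
#!/bin/sh
# Toy model of ASM mirroring with one failure group per Exadata cell
# (two copies of each extent). Not ASM's real allocation algorithm.

# nth K ARG1 ARG2 ... -> prints the K-th argument (1-based)
nth() {
  local k=$1
  shift
  shift $(( k - 1 ))
  echo "$1"
}

# place_extents NEXTENTS CELL1 CELL2 ... -> one line per extent
place_extents() {
  local n=$1 i=0 p m
  shift
  if [ "$#" -lt 2 ]; then
    echo "need at least two failure groups" >&2
    return 1
  fi
  while [ "$i" -lt "$n" ]; do
    p=$(( i % $# + 1 ))            # primary copy: round-robin over the cells
    m=$(( (i + 1) % $# + 1 ))      # mirror copy: the next cell, never the same one
    echo "extent=$i primary=$(nth "$p" "$@") mirror=$(nth "$m" "$@")"
    i=$(( i + 1 ))
  done
}

place_extents 4 cel01 cel02 cel03
```

Because every extent's two copies sit in different failure groups, losing any single cell still leaves one copy of every extent available.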

- A single database can be partially stored on Exadata storage and partially on traditional storage devices.

- Tablespaces can reside on Exadata storage, non-Exadata storage, or a combination of the two, and this is transparent to database operations and applications. But to benefit from the Smart Scan capability of Exadata storage, the entire tablespace must reside on Exadata storage. This co-residence and co-existence is a key feature enabling online migration to Exadata storage.

- Online migration can be done if the existing database is deployed on ASM and is using ASM redundancy.

- Migration can be done using Recovery Manager (RMAN).

- Data Guard can also be used to facilitate a migration.

- All these approaches provide a built-in safety net, as you can undo the migration gracefully if unforeseen issues arise.

- With the Exadata architecture, all single points of failure are eliminated. Familiar features such as mirroring, fault isolation, and protection against drive and cell failure have been incorporated into Exadata to ensure continual availability and protection of data.

- Hardware Assisted Resilient Data (HARD) is built into Exadata:

  - It is designed to prevent data corruptions before they happen.

  - It provides higher levels of protection and end-to-end validation for your data.

  - Exadata performs extensive validation of the data, including checksums, block locations, magic numbers, head and tail checks, alignment errors, etc.

  - Implementing these data validation algorithms within Exadata prevents corrupted data from being written to permanent storage.

  - Furthermore, these checks and protections are provided without the manual steps required when using HARD with conventional storage.

- Flashback:

  - The Flashback feature works in Exadata the same as it would in a non-Exadata environment.

- Recovery Manager (RMAN):

  - All existing RMAN scripts work unchanged in the Exadata environment.

Thursday, June 21, 2012

VirtualBox - How to create ASM Disks

1. Shut down the virtual machine:

# shutdown -h now

2. From a Windows command prompt, create a directory for the disk files (E:\VirtualBox is the VirtualBox home here; change the path to match your own VirtualBox directory):

mkdir E:\VirtualBox\VM\harddisks

3. Run the following from the command prompt:

cd E:\VirtualBox\VM\harddisks

# Create the disks and associate them with VirtualBox as virtual media.

# Each disk is 5 GB (5120 MB), 25 GB in total

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage createhd --filename asm1.vdi --size 5120 --format VDI --variant Fixed

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage createhd --filename asm2.vdi --size 5120 --format VDI --variant Fixed

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage createhd --filename asm3.vdi --size 5120 --format VDI --variant Fixed

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage createhd --filename asm4.vdi --size 5120 --format VDI --variant Fixed

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage createhd --filename asm5.vdi --size 5120 --format VDI --variant Fixed

# Connect them to the VM

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage storageattach Linux --storagectl "SATA Controller" --port 1 --device 0 --type hdd --medium asm1.vdi --mtype shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage storageattach Linux --storagectl "SATA Controller" --port 2 --device 0 --type hdd --medium asm2.vdi --mtype shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage storageattach Linux --storagectl "SATA Controller" --port 3 --device 0 --type hdd --medium asm3.vdi --mtype shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage storageattach Linux --storagectl "SATA Controller" --port 4 --device 0 --type hdd --medium asm4.vdi --mtype shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage storageattach Linux --storagectl "SATA Controller" --port 5 --device 0 --type hdd --medium asm5.vdi --mtype shareable

# Make shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage modifyhd asm1.vdi --type shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage modifyhd asm2.vdi --type shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage modifyhd asm3.vdi --type shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage modifyhd asm4.vdi --type shareable

E:\VirtualBox\VM\harddisks>E:\VirtualBox\VBoxManage modifyhd asm5.vdi --type shareable


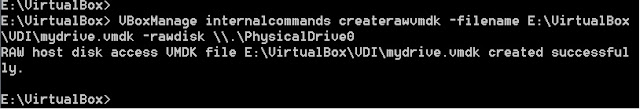

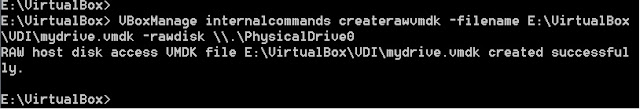
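On a Linux or macOS host, the same create/attach/shareable steps could be scripted with a loop. This is a sketch under assumptions: VBoxManage is on the PATH, the VM is named "Linux" with a controller named "SATA Controller" as in the commands above, and by default the script only prints the commands. The `DRY_RUN`, `VM` and `CTL` variables and the `run` helper are hypothetical additions, not VirtualBox features; set `DRY_RUN=0` to actually execute the commands.

```shell
#!/bin/sh
# Create, attach and mark shareable five fixed-size 5 GB disks for ASM.
VM=${VM:-Linux}                       # VM name used in the commands above
CTL=${CTL:-"SATA Controller"}         # storage controller name in that VM
DRY_RUN=${DRY_RUN:-1}                 # 1 = print commands only, 0 = run them

# run CMD ARGS... -> prints the command in dry-run mode, otherwise executes it
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

for i in 1 2 3 4 5; do
  disk="asm$i.vdi"
  run VBoxManage createhd --filename "$disk" --size 5120 --format VDI --variant Fixed
  run VBoxManage storageattach "$VM" --storagectl "$CTL" --port "$i" --device 0 \
      --type hdd --medium "$disk" --mtype shareable
  run VBoxManage modifyhd "$disk" --type shareable
done
```

Reviewing the printed commands before switching off the dry run is a cheap way to catch a wrong VM or controller name.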

VirtualBox - Access Entire Physical Disk from VirtualBox

1. From Windows, open a command prompt as Administrator.

2. Go to the VirtualBox installation location:

E:\VirtualBox

3. Run the following command from the command prompt:

VBoxManage internalcommands createrawvmdk -filename E:\VirtualBox\VDI\mydrive.vmdk -rawdisk \\.\PhysicalDrive0


VirtualBox - Guest Additions Installation

1. Log into the VM as the root user and add the "divider=10" option to the kernel boot options in the "/etc/grub.conf" file to reduce the idle CPU load. The entry should look something like this:


# grub.conf generated by anaconda

#

# Note that you do not have to rerun grub after making changes to this file

# NOTICE: You have a /boot partition. This means that

# all kernel and initrd paths are relative to /boot/, eg.

# root (hd0,0)

# kernel /vmlinuz-version ro root=/dev/VolGroup00/LogVol00

# initrd /initrd-version.img

#boot=/dev/sda

default=0

timeout=5

splashimage=(hd0,0)/grub/splash.xpm.gz

hiddenmenu

title Enterprise Linux (2.6.18-194.el5)

root (hd0,0)

kernel /vmlinuz-2.6.18-194.el5 ro root=/dev/VolGroup00/LogVol00 rhgb quiet divider=10

initrd /initrd-2.6.18-194.el5.img

2. From the VirtualBox menu bar, select Devices -> Install Guest Additions.

3. Double-click the CD-ROM to mount the Guest Additions.

4. Open a terminal, go to the Guest Additions location and run:

sh ./VBoxLinuxAdditions.run

5. Reboot Linux.

Wednesday, June 20, 2012

Oracle Database Replication (From RMAN Backup)

1. Take a full RMAN backup on the source system and transfer it (e.g. by FTP) from the source to the test system.

2. On TEST: set ORACLE_SID to the same name as the source database.

3. $ rman target / nocatalog

4. Set the DBID of the source database:

RMAN> set dbid 1234567890;

5. Start the Oracle instance as below (adjust the pfile parameters according to the available memory):

RMAN> startup nomount pfile='/app/u01/oracle/product/10.2.0/db_1/dbs/initorcl.ora';

6. Restore the control file from the backup location, assuming the control file was backed up with the RMAN full backup:

RMAN> restore controlfile from '/app/u03/rman_backup/rman_level0_weekly_130112/controlfile_dbn0l8ll_1_1';


7. Mount the database after restoring the control file:

RMAN> alter database mount;

8. Catalog the backup location:

RMAN> catalog start with '/app/u03/rman_backup/rman_level0_weekly_130112';

9. List the incarnations of the database and reset the database to the parent incarnation (incarnation key 1 in this example):

RMAN> list incarnation of database;

RMAN> reset database to incarnation 1;

10. Exit from RMAN, go to the RMAN restore script location and run Restore_RMAN.sh in the background:

nohup ./Restore_RMAN.sh &

------- RMAN restore Script -------------------------------------------------------------------------

#!/bin/bash

ORACLE_BASE=/app/u01/oracle; export ORACLE_BASE;

ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1; export ORACLE_HOME;

LD_LIBRARY_PATH=$ORACLE_HOME/lib:$LD_LIBRARY_PATH; export LD_LIBRARY_PATH;

#LD_LIBRARY_PATH_64=$ORACLE_HOME/lib; export LD_LIBRARY_PATH_64;

PATH=$PATH:$ORACLE_HOME/bin

export PATH

ORACLE_SID=orcl; export ORACLE_SID

TODAY=`date '+%Y%m%d_%H%M%S'`

LOG_FILE=/home/oracle/DBA_Scripts/RMAN/${ORACLE_SID}_full_restore.log

echo "ORCL full restore started at `date`" >$LOG_FILE

rman <<! >>$LOG_FILE

connect target /

run {

allocate channel ch1 device type disk;

allocate channel ch2 device type disk;

allocate channel ch3 device type disk;

allocate channel ch4 device type disk;

restore database;

release channel ch1;

release channel ch2;

release channel ch3;

release channel ch4;

}

!

echo "ORCL full restore finished at `date`" >>$LOG_FILE

---------------------------------------------------------------------------------------------------
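If the number of channels needs to change (for example, to match the CPU count on the test server), the RMAN run block in the script above can be generated rather than hand-edited. A minimal sketch: `rman_restore_block` is a hypothetical helper that prints the same allocate/restore/release sequence for N disk channels; its output could be fed to `rman` the same way the here-document in Restore_RMAN.sh is.

```shell
#!/bin/sh
# rman_restore_block NCHANNELS -> prints an RMAN run block that allocates
# NCHANNELS disk channels, restores the database, and releases the channels.
rman_restore_block() {
  local n=$1 i
  echo "run {"
  i=1
  while [ "$i" -le "$n" ]; do
    echo "allocate channel ch$i device type disk;"
    i=$(( i + 1 ))
  done
  echo "restore database;"
  i=1
  while [ "$i" -le "$n" ]; do
    echo "release channel ch$i;"
    i=$(( i + 1 ))
  done
  echo "}"
}

# Usage (sketch): with the environment set as in Restore_RMAN.sh,
#   { echo "connect target /"; rman_restore_block 4; } | rman >> "$LOG_FILE"
rman_restore_block 2
```

Calling it with 4 reproduces the four-channel block used in the script above.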

11. After the restore completes, find the highest SCN and recover the database until that SCN:

SQL> select group#, to_char(first_change#), status, archived from v$log order by first_change# desc;

RMAN> recover database until scn 9900929507575;

RMAN> alter database open resetlogs;
